Who are you, in a sentence?

I’m Alexander Nachaj, PhD, a digital marketer (founder of Acorn Digital Consulting), author, and academic with a background in teaching and publishing on religion, culture, and media.

What do you do professionally right now?

I run a boutique digital marketing practice through Acorn Digital Consulting and help organizations improve performance across channels like paid search, SEO, analytics, and conversion-focused strategy. I also write.

What kinds of clients do you work with?

Typically businesses, organizations, and institutions that want measurable growth, tighter tracking, and more efficient marketing spend, especially where a team needs a strategic partner rather than just execution. This often includes higher education, service providers, and SaaS companies.

What marketing services do you offer?

Common engagements include:

- Digital marketing strategy + audits

- Google Ads / paid media consulting

- SEO + content planning

- Analytics setup and reporting (measurement that actually answers business questions)

- Landing page and funnel optimization (CRO)

Do you do copywriting?

Yes. I take on select copywriting projects when the scope is clear (web pages, landing pages, positioning, and conversion-focused messaging).

Can I hire you for a one-off consult?

Yes. If you have a specific problem (e.g., “our ads aren’t converting,” “we don’t trust our tracking,” “we need an SEO audit”), a focused consult is often the fastest way to get you where you need to be.

What’s your academic background?

I earned my PhD at Concordia University (2021). I taught in Religions & Cultures and led technical-writing tutorials for Engineering, alongside editorial work in academic publishing.

What do you research / write about academically?

My academic interests center on American religion, media, celebrity, and masculinity.

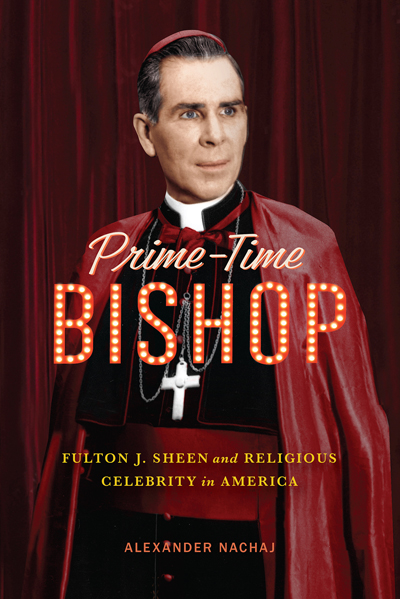

What is Prime-Time Bishop?

Prime-Time Bishop: Fulton J. Sheen and Religious Celebrity in America is my first academic monograph, published by McGill-Queen’s University Press in 2025.

Where can I find your publications, articles, and writing?

Your best starting point is my Bibliography page, which links out to:

- Academic publications

- Blogs / articles / guest posts

- Creative writing

What kind of creative work do you write?

My fiction leans mostly into the speculative and surreal. I’ve also written screenplays recognized at festivals. A full list lives on my Creative Writing page.

I saw broken links in your bibliography. What’s up with that?

Older web publications sometimes move or disappear. I try to keep a master list to make discovery easier even when the internet loses things, but I can’t always win.

Are you available for speaking, workshops, or guest lectures?

Often, yes, especially on topics like digital marketing strategy, measurement/analytics, SEO/content systems, and (academically) religion/media/celebrity/history. The easiest way to arrange something is to message me with your date, format, audience, and topic idea.

What’s the best way to contact you?

At the moment, LinkedIn is preferable, but my Contact page lists other ways to reach me.